HRP-2 Musical Accompanist Robot (2009-2010)

Ever wished you had a musical partner to play with at any time? In my first project at Kyoto University, I used the HRP-2 humanoid robot along with Prima, the Yamaha Vocaloid operatic voice, to develop a singing, theremin-playing musical accompanist robot. The robot looked at my flute to detect visual gestures, and listened to my notes to synchronize the timing of its theremin-playing. It is very satisfying when a robot synchronizes with you!

I developed the accompanist in Python using Hough line detection, RANSAC, note onset detectors, and TCP/IP and NTP concepts. Base theremin mechanisms were provided by Takeshi Mizumoto. The paper won the NTF Top Paper in Entertainment Robotics and Systems at IROS 2010.
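To give a feel for what a note onset detector does, here is a minimal sketch in Python. It is not the detector used on the HRP-2; it is a simple energy-based approach chosen for illustration, and the function name `detect_onsets`, its parameters, and the threshold value are all assumptions of mine. It marks an onset wherever the energy of the audio signal jumps sharply from one frame to the next.

```python
import numpy as np

def detect_onsets(signal, sample_rate, frame_size=1024, hop_size=512, threshold=0.1):
    """Return approximate onset times in seconds (illustrative sketch only).

    An onset is reported at the first frame of each run of frames whose
    normalized energy rises by more than `threshold`.
    """
    signal = np.asarray(signal, dtype=float)
    n_frames = 1 + max(0, (len(signal) - frame_size) // hop_size)

    # Short-time energy of each overlapping frame.
    energy = np.array([
        np.sum(signal[i * hop_size : i * hop_size + frame_size] ** 2)
        for i in range(n_frames)
    ])
    if energy.max() > 0:
        energy = energy / energy.max()  # normalize to [0, 1]

    # Keep only increases in energy; decreases are note endings, not onsets.
    rise = np.maximum(np.diff(energy, prepend=energy[0]), 0.0)

    onsets = []
    for i in range(1, n_frames):
        if rise[i] > threshold and rise[i - 1] <= threshold:
            onsets.append(i * hop_size / sample_rate)
    return onsets
```

Because each frame spans many samples, the reported times are only accurate to within about one frame length. A flute has a soft attack, so a real system would likely need a more sensitive feature than raw energy; this sketch only shows the basic idea of turning audio into timing events that a robot could synchronize to.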

Here is a video of the HRP-2 musical accompanist. Many more music robot videos can be viewed here.

HRP-2 Expressive Robot (2010)

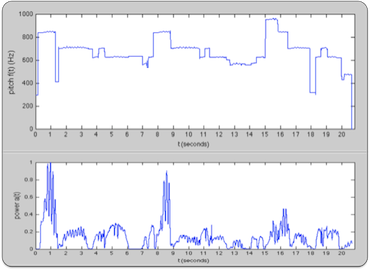

Making a robot truly expressive is a major challenge. How does one make a robot seem less, well, robotic? In this video called Programming by Playing, I programmed the robot to play with expression (vibrato, tempo, changes in pitch and power) using the curves extracted from a human flute performance. Here is the original performance for comparison. Do they sound different? Here is my poster.

What exactly is expression? According to musicologist Patrik Juslin, expression can be broken down into five components he calls GERMS: Generative Rules, Emotion, Randomness, Motion Principles and Stylistic Unexpectedness. So far, I don’t know of any robot project that incorporates all of these principles.

Thanks to the music robot project, I’ve become passionate about discovering how this elusive concept of expression works, starting with the component of Emotion.